Moving Providers and Tainted IPs

I recently switched hosting providers for the host you’re reading this on (more on the hows and whys in a later post, perhaps). Most of the “work” of moving came down to changing the main IP address, since that obviously changed. In a shared hosting environment you are assigned an IPv4 address at random, and it is likely to have been used by someone else before. My old instance had been running on the same IP for about a decade, so that address had a decent reputation for not sending bad traffic around the world, but it quickly became clear I was not so lucky with the new one.

Some searching revealed it had also been on blacklists in the past for sending out spam. Not great!

(One may not really care about IP reputation in general - for me, as I’m using the host as the main nameserver for all my domains, it’s relatively important that it is available unimpeded globally so I can receive email and such.)
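One common way to check a listing yourself is a DNS-based blacklist (DNSBL) query: reverse the IPv4 octets, append the list’s zone, and resolve the resulting name. An answer means the address is listed; NXDOMAIN means it isn’t. The sketch below is not necessarily how the listings above were found; `zen.spamhaus.org` is just an example zone and `192.0.2.10` a documentation address standing in for the real IP.

```python
import ipaddress
import socket
from typing import Optional

def dnsbl_query_name(ip: str, zone: str) -> str:
    """Build the DNSBL lookup name: IPv4 octets reversed, then the list's zone."""
    addr = ipaddress.IPv4Address(ip)  # raises ValueError on malformed input
    return ".".join(reversed(str(addr).split("."))) + "." + zone

def dnsbl_lookup(ip: str, zone: str) -> Optional[str]:
    """Return the list's answer (normally an address in 127.0.0.0/8), or None.

    A failed lookup (normally NXDOMAIN, i.e. "not listed") is reported as None.
    Answer codes are list-specific, and some lists use special codes to signal
    errors, so inspect the value rather than treating any answer as "listed".
    """
    try:
        return socket.gethostbyname(dnsbl_query_name(ip, zone))
    except socket.gaierror:
        return None
```

For example, `dnsbl_lookup("192.0.2.10", "zen.spamhaus.org")` resolves `10.2.0.192.zen.spamhaus.org` and returns whatever the list answers.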

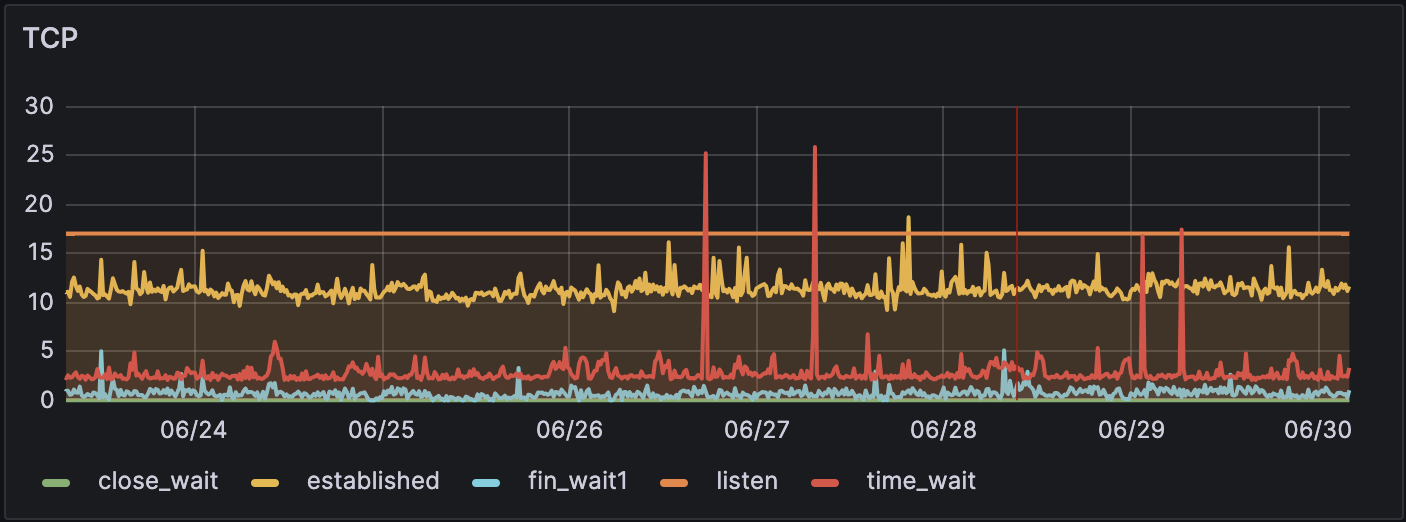

My standard server monitoring setup showed some pretty obvious changes in TCP connections, from a clean old server:

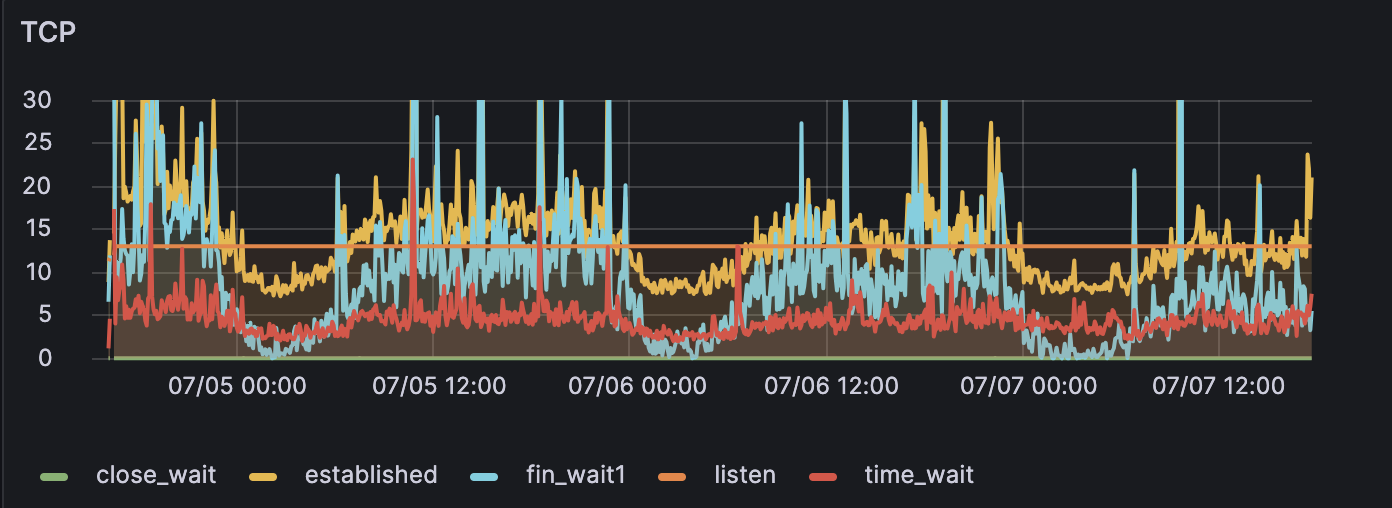

to a new “dirty” server:

Note the “wave” of TCP connections in the ESTABLISHED and FIN_WAIT states, a sure sign of a bunch of hosts trying (and failing) to retrieve some data. Nothing major, but combined with the earlier blacklisting, and with this being the sole public IP for all my domains, it’s not ideal.
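Graphs like these can be fed by many collectors, and this is not necessarily how the ones above were produced. On Linux, though, the raw numbers are exposed in `/proc/net/tcp`, whose `st` column holds each socket’s state as a hex code. A minimal sketch that tallies those states, assuming the state codes from the kernel’s `include/net/tcp_states.h`:

```python
import os
from collections import Counter

# Hex state codes as they appear in the 'st' column of /proc/net/tcp.
TCP_STATES = {
    "01": "ESTABLISHED", "02": "SYN_SENT", "03": "SYN_RECV",
    "04": "FIN_WAIT1", "05": "FIN_WAIT2", "06": "TIME_WAIT",
    "07": "CLOSE", "08": "CLOSE_WAIT", "09": "LAST_ACK",
    "0A": "LISTEN", "0B": "CLOSING", "0C": "NEW_SYN_RECV",
}

def count_tcp_states(proc_text: str) -> Counter:
    """Tally socket states from the text of /proc/net/tcp (or /proc/net/tcp6)."""
    counts = Counter()
    for line in proc_text.splitlines()[1:]:  # first line is the column header
        fields = line.split()
        if len(fields) < 4:
            continue
        st = fields[3].upper()  # fields: sl, local_address, rem_address, st, ...
        counts[TCP_STATES.get(st, st)] += 1
    return counts

if __name__ == "__main__" and os.path.exists("/proc/net/tcp"):  # Linux only
    with open("/proc/net/tcp") as f:
        print(count_tcp_states(f.read()))
```

Sampling this periodically and plotting the ESTABLISHED and FIN_WAIT1/FIN_WAIT2 counts would show the same kind of “wave”.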

Solving this was pretty easy - just create a new instance and move everything to a new IP once again. My new provider made this a 10-minute process: snapshot the current instance, fire up a new instance from that snapshot, update the BIND config and boom, new IP. While waiting the requisite 24 hours for the new DNS settings to propagate, I got curious, though: what was that bad traffic anyway? It turned out to be pretty easy to find out.
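When a server is its own nameserver, the heart of the “update the BIND config” step is swapping the old address for the new one in the zone files and bumping the SOA serial so that secondaries pick up the change. A minimal sketch, assuming a hypothetical zone where the serial is tagged with a `; serial` comment (a common convention, not something BIND requires) and using documentation addresses; `move_zone_to_ip` is an illustrative name, not a BIND tool:

```python
import re

def move_zone_to_ip(zone_text: str, old_ip: str, new_ip: str) -> str:
    """Swap old_ip for new_ip and increment the SOA serial by one.

    Assumes the serial is the number immediately before a '; serial' comment.
    """
    # Replace the address only as a whole token (so 192.0.2.10 != 192.0.2.100).
    ip_pattern = r"(?<![\d.])" + re.escape(old_ip) + r"(?![\d.])"
    updated = re.sub(ip_pattern, new_ip, zone_text)

    def bump(match: re.Match) -> str:
        # Keep the whitespace and comment exactly as they were.
        return str(int(match.group(1)) + 1) + match.group(2)

    updated, n = re.subn(r"(\d+)(\s*;\s*serial)", bump, updated,
                         count=1, flags=re.IGNORECASE)
    if n != 1:
        raise ValueError("no '; serial' marker found in zone text")
    return updated
```

After writing the result back, `named-checkzone` can validate the file and `rndc reload` loads it.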

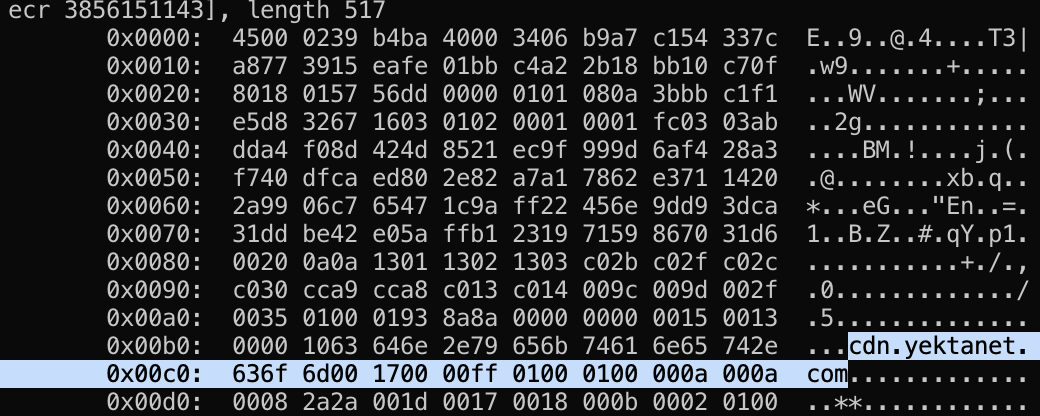

First I ran a quick tcpdump to see what kind of traffic I should be looking for. `tcpdump -n not port ssh` confirmed it was unsolicited traffic on port 443 (HTTPS), meaning this IP had been used in the past to host a website. Fair enough. But which website? The nginx logs didn’t reveal anything interesting, presumably because the connections never got past TLS negotiation. Digging into that took a slightly more elaborate tcpdump:

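```shell
# Options go before the filter expression, which is portable across getopt implementations.
# -s0 captures whole packets (it supersedes the earlier -s 1500); -X prints hex plus ASCII.
tcpdump -i any -nXSs0 -tt '(tcp[((tcp[12:1] & 0xf0) >> 2)+5:1] = 0x01) and (tcp[((tcp[12:1] & 0xf0) >> 2):1] = 0x16)'
```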

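This matches only the client’s opening TLS handshake message (the ClientHello), which carries the requested host name in its Server Name Indication (SNI) extension. `(tcp[12:1] & 0xf0) >> 2` is the TCP header length in bytes, so the filter checks that the first payload byte is 0x16 (a TLS handshake record) and the sixth is 0x01 (a ClientHello); the `-X` dump then shows the host name in plain ASCII.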
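The filter only selects packets; the host name still has to be read out of the ClientHello bytes. As a rough illustration of where the SNI sits, here is a simplified Python parser that applies the same two byte checks to a raw TCP segment and then walks the ClientHello layout (RFC 5246) to the `server_name` extension (RFC 6066). It assumes the whole ClientHello fits in one segment and is not a robust TLS parser; `sni_from_tcp_segment` is a hypothetical helper name.

```python
import struct
from typing import Optional

def sni_from_tcp_segment(segment: bytes) -> Optional[str]:
    """Return the SNI host name from a TCP segment carrying a TLS ClientHello.

    Returns None for non-ClientHello, truncated, or malformed segments.
    """
    try:
        off = (segment[12] & 0xF0) >> 2   # TCP header length: data offset (words) * 4
        p = segment[off:]                 # TLS bytes after the TCP header
        if p[0] != 0x16 or p[5] != 0x01:  # handshake record? ClientHello?
            return None
        i = 5 + 4                         # skip record header and handshake type/length
        i += 2 + 32                       # client_version, random
        i += 1 + p[i]                     # session_id (1-byte length prefix)
        (n,) = struct.unpack_from("!H", p, i)
        i += 2 + n                        # cipher_suites (2-byte length prefix)
        i += 1 + p[i]                     # compression_methods (1-byte length prefix)
        (ext_total,) = struct.unpack_from("!H", p, i)
        i += 2
        end = i + ext_total
        while i + 4 <= end:
            ext_type, ext_len = struct.unpack_from("!HH", p, i)
            i += 4
            if ext_type == 0x0000:        # server_name extension
                # list length (2), name_type (1, 0 = host_name), name length (2)
                if p[i + 2] == 0:
                    (name_len,) = struct.unpack_from("!H", p, i + 3)
                    name = p[i + 5:i + 5 + name_len]
                    if len(name) == name_len:  # reject truncated names
                        return name.decode("ascii")
            i += ext_len
    except (IndexError, struct.error, UnicodeDecodeError):
        pass                              # truncated or malformed packet
    return None
```

Fed the raw bytes of a captured ClientHello, it returns the host name the client asked for, which is exactly what the `-X` dump shows in its ASCII column.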

And sure enough, there it was:

Turns out it was used as part of an ad server CDN from Iran at some point.

The good news is that the newly assigned IP is clean as a whistle and the TCP graph looks pretty much like the first one here. DNS propagation will be complete in a few hours’ time and then I’ll shut down the old instance and will probably have the current clean IP for years to come. All’s well that ends well.